A recent mini-poll reveals, as perhaps expected, that the primary sources of information used to measure desktop support issues are the number of support calls hitting the help desk and the number of calls successfully resolved. For over three quarters of organisations, these two rather primitive measurements form the foundation of desktop support measurement. Time to resolve calls is the other significant metric.

More specialised metrics based on the type of call are monitored by just a third of operations, and estimates of diagnosis and resolution costs are tracked by only one in ten organisations. We are still a long way off measuring the real cost of support!

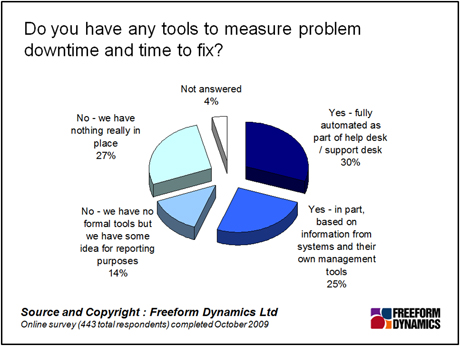

We get a better picture of what tools are employed to measure downtime and time-to-fix processes: a wide range of solutions have been adopted, with automated tracking supplied by support desk tools leading the way.

But even these are used by fewer than one organisation in three. We recognise that responses will have been gathered from organisations of all sizes. Information garnered from more generic systems management tools is used by another 25 per cent. All the same, this leaves over forty per cent reporting that they either have no formal tools in place to measure downtime and repair times or have only a general idea of such metrics for reporting purposes.

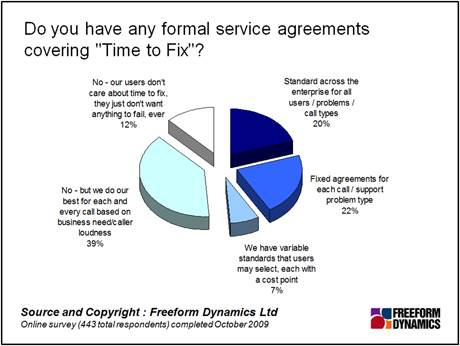

When it comes to having formal service level policies in place covering time-to-fix operations, 42 per cent of organisations either have standard agreements in place with users or make use of agreements in which the time to fix varies by problem type. Another seven per cent make use of agreements in which users select a time to fix, each option associated with a defined cost. The largest proportion of support organisations has no formal agreements in place, but attempts to fix each call based on user needs.

Damning with faint praise? Measuring user perceptions is challenging, but it is clear that the primary metric in use is the number of complaints received. This measure of users’ perceptions is counted far more often than the amount of praise received. Nearly half of organisations use internal or external surveys as a guide to user satisfaction with support call handling, while more than 40 per cent rely on the old standard of “gut feelings”. Around a quarter of respondents make use of quality management and reporting tools such as end-user response time monitoring. One in ten do nothing at all.

With these standards in place, an interesting reader comment makes the point: “Why no mention of user satisfaction in the metrics? We added this to our support system a couple of years back and it’s incredibly helpful to see when users are (dis)satisfied with how their issues are dealt with and you get better participation than with a periodic survey. Investigating individual calls with negative feedback has enabled us to tighten up a number of procedures and it’s meant a focus away from volume and ’time to resolve’ both of which generally have little relevance to user satisfaction – which is the aim in providing a support service, isn’t it?”

To conclude, the monitoring and measurement of support call resolution times and of end users’ perceptions of the help desk service is a maturing science. Expectation management, together with reporting tools that let everyone know how well the support service and the general IT services are operating, can help smooth interactions between users and IT. There is still some way to go to identify the best methods to address these areas. Indeed, the whole of desktop service delivery remains a work in progress for most organisations.