Ed: AI is figuring more and more prominently in our industry coverage at Freeform Dynamics. During a recent team meeting, Bryan Betts referred to some of the insights he gained from attending Intel’s ‘AI Day’ in San Francisco towards the end of last year. We thought it was worth asking him to write it up properly, and the end result is a great overview of some of the key aspects of how things are shaping up in this space. Enjoy.

For those of us more used to the “Intel Inside” jingle and all the stickers that went with it, the idea of the chip giant also being a big player in AI seems strange. And yet, as the company showed at its very well attended Intel AI Day in San Francisco towards the end of last year, it not only has great plans but it has also already achieved a significant amount.

The key to understanding why it is interested in AI came during a session where Intel execs previewed much of the material that would later appear on the main stage, and it is this: Intel sees AI and AI-supported software being, if not ubiquitous in the future, then almost everywhere. And it wants that software to run not as it does today, on specialist chips such as graphics processors (GPUs) from the likes of Nvidia and AMD, but on mainstream Intel hardware. Hence its investment in Nervana Systems, whose AI hardware is being built into a line of Intel Xeon processors.

This version of AI isn’t what many of us – and certainly many of the older generation – might think of when we hear the term, though. It’s less talking computers and Asimov’s Three Laws of Robotics, and more about analytics, whether that’s for fraud detection, image recognition, or improving your service when a customer phones in by predicting their next request. Although yes, the robots are still around, it’s just that today we call them self-driving cars.

Of course, there are still those working on more general forms of machine intelligence, most notably Google’s DeepMind, but in the meantime there is a lot more that can usefully be done to put AI technology to work today. Some is of direct use to Intel of course – chip manufacturing is hugely complex, and this technology can help recognise process problems, for example. But the main attraction is much broader than that, as one of the execs noted: “We’re in it because if you invest in analytics, you invest in your data centre, and if you invest in your data centre, Intel smiles.”

Deconstructing AI

The other key to understanding what’s going on here is realising that what Intel and other researchers call AI is not a discrete ‘thing’, but a collection of related and often interdependent technologies. These would indeed all be needed for building ‘an artificial intelligence’ – a synthetic intellect, if you like – but they are also useful in many other ways that are more immediate.

An example is the chatbot, whether it’s the automated support tool that a SaaS vendor proudly touts as AI-enabling its cloud services, the legal advice system for fighting parking tickets, or the fake users that some of the less-scrupulous online dating services set up to trick the lonely into spending extra cash (the adult relationship and ‘entertainment’ industry has always been an early adopter of new technologies). These all use AI-derived or related technologies such as machine learning and natural language processing, but are only a subset of AI in total.

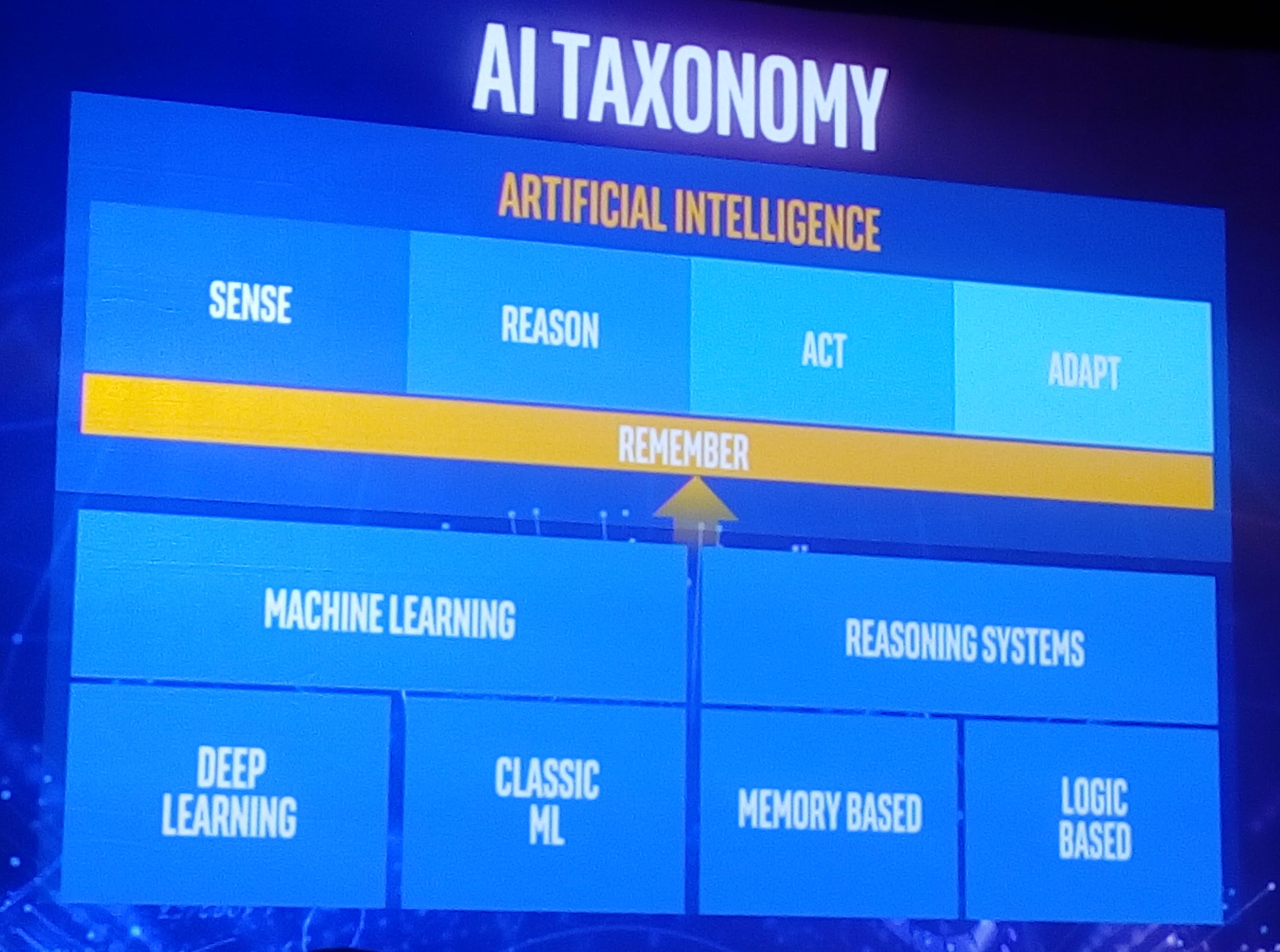

A useful thing at this point might be a taxonomy – here’s one I snapped at AI Day. “Sense, reason, act & adapt” is Intel’s shorthand for what AI technology is really about, and it’s a useful one. Essentially it’s all about the system taking masses of data, trying to make sense of it by constructing a model, applying that model to more incoming world data, and then using its findings to adapt the model (with or without human guidance, depending on the type of learning in use) when it gets it right or wrong.
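To make the “sense, reason, act & adapt” loop a little more concrete, here is a minimal sketch in Python. It is an illustration only, not anything Intel showed: the function names, the made-up “world” (a noisy straight line, y ≈ 2x + 1) and the learning rate are all assumptions chosen to keep the example tiny. The model is a simple line fitted one observation at a time, adapting whenever its prediction turns out to be wrong.

```python
import random


def sense(rng):
    """Sense: take one observation from a hypothetical noisy world (y ≈ 2x + 1)."""
    x = rng.uniform(-1.0, 1.0)
    y = 2.0 * x + 1.0 + rng.gauss(0.0, 0.05)
    return x, y


def reason(model, x):
    """Reason: apply the current model (slope, intercept) to new input."""
    slope, intercept = model
    return slope * x + intercept


def adapt(model, x, error, learning_rate=0.1):
    """Adapt: nudge the model in the direction that shrinks the error."""
    slope, intercept = model
    return (slope - learning_rate * error * x,
            intercept - learning_rate * error)


def run(steps=2000, seed=0):
    rng = random.Random(seed)   # fixed seed so repeated runs behave the same
    model = (0.0, 0.0)          # start out knowing nothing
    for _ in range(steps):
        x, actual = sense(rng)
        prediction = reason(model, x)   # "act" here is simply making a prediction
        error = prediction - actual     # feedback on how right or wrong it was
        model = adapt(model, x, error)
    return model


slope, intercept = run()
print(f"learned model: y = {slope:.2f}x + {intercept:.2f}")
```

Here the feedback arrives automatically with every observation, which corresponds to learning without human guidance; in a supervised setting a person would supply or correct the `actual` values instead.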

Upstreaming

There was much talk about “end to end solutions”, “democratising AI” and “compressing the innovation cycle”, and a bit of wordplay in “AI on IA” (the latter meaning Intel Architecture, or how its processors are structured and programmed). However, a rare case of a buzzword actually saying something informative was upstreaming, a term Intel execs used for the process of pushing resources to and supporting the developers building the underlying software tools.

In particular, that means contributing time and effort to open-source projects, even though the resulting software will be free – hence Intel’s claim to be a top contributor to Linux, Apache Spark and Hadoop. Among other things, it also means optimising the performance on IA of popular AI libraries and frameworks such as Caffe, Theano, Torch and Nervana’s Neon, cooperating on Google’s TensorFlow machine learning library, and adding deep neural network (DNN) primitives to Intel’s widely-used MKL maths library.

The argument – and it’s a strong one – is that providing the fundamentals as open-source enables more users to try things out faster and more economically, and therefore should boost development across the whole field.

There are questions arising, of course. For instance, how truly open is your open-source if it’s heavily optimised for your hardware, and how might developers react to that? For now at least, Intel is probably OK here – it is the software layer that open-source supporters worry about most, and they’ll use whichever hardware gets their job done fastest.

AI in silicon

As I mentioned, Intel is already using AI-based analytics in its factories; it also owns AI-as-a-service provider Saffron Technology, which specialises in reasoning systems (see the taxonomy above). However, the real pay-off for the company will come if and when the widespread adoption of AI technology enables it to sell bucketloads of high-priced processor chips. It has two horses in this race: Altera, which it cooperated with before acquiring it in 2015, and Nervana Systems, which it bought in 2016.

Among other things, Altera develops reprogrammable chips called FPGAs. Not only can these run AI applications at hardware speed (e.g. speech recognition and translation, network threat analysis, even playing video games), but their reprogrammable nature means that machine learning software can literally reprogram the chip as it learns. Intel has launched the socket-compatible Xeon E5 ‘Skylake’ processor that also has an Altera FPGA built-in.

Nervana, on the other hand, specialises in deep learning. Although it initially ran its deep learning SaaS platform on Nvidia GPUs, it subsequently developed its own hardware acceleration engine for deep learning which it claims outperforms Nvidia ten-fold. Intel is now preparing to launch this in chip form in 2017 under the code-name Lake Crest.

One problem which both Nervana and the GPU developers have is that their devices are not bootable. That means they have to reside within a server, which runs the host operating system but is otherwise just burning power. So just as it did with Altera, Intel is also building the Nervana technology into its Xeon Phi processor line, starting with a model code-named Knights Crest. It claims that with all these optimisations, its bootable AI-enhanced processors will by 2020 enable deep learning systems to be trained one hundred times faster than 2016’s fastest systems.

The wider picture

Intel’s spending to get AI on IA is a gamble, but not an over-reaching one. There’s a lot of opportunity in these technologies, as others have also demonstrated, for example IBM with its Watson AI business unit. Besides the obvious support for human decision making, sample AI case studies include research into weather forecasting, the genomics behind cancer and the early diagnosis of eye disease, the demographics of magazine readers, and a project that helps find missing children by sifting through a mass of reports and rumours too big for any normal human to embrace.

What this shows is that machine learning and reasoning are the next steps beyond big data. These AI techniques take that mass of data and try to learn from it on our behalf – typically by deriving complex models that may explain the data, testing them against more data, and then adapting the model to try again.

In other words, if you do anything with big data – or indeed with complex decision making of any sort – you ignore AI at your peril. It is a complex field to get started in, but fortunately there is a fair bit of education and training around, including online academic courses (and Intel is adding yet more, with its Intel Nervana AI Academy).

Bryan Betts is sadly no longer with us. He worked as an analyst at Freeform Dynamics between July 2016 and February 2024, when he tragically passed away following an unexpected illness. We are proud to continue to host Bryan’s work as a tribute to his great contribution to the IT industry.
