As anyone who has started down the path will confirm, desktop virtualisation is not a single thing – rather it offers a number of ways of modernising the management of the desktop environment, either in its entirety, or for specific user types.

It’s not just about giving everyone dumb(ish) terminals and stuffing everything else into the data centre; advances in chipset design and management capabilities allow you to combine virtual technologies with “real” client computing, which we have documented here. So are organisations planning to implement any of the desktop virtualisation options available, and how do we make a business case for any solution?

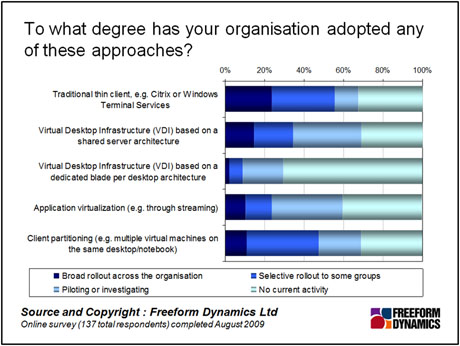

To kick things off, we know from readers of The Reg that understanding of the various desktop virtualisation options available is patchy. With so many vendors using the same terminology for a range of different technologies, just using the term “desktop virtualisation” is confusing. The chart below, taken from research we undertook last summer, shows which options are attracting attention.

Given that the respondents to the above survey were readers of The Register, it is not surprising to see that client partitioning usage was high: desktop virtualisation systems offer significant benefits for IT professionals.

As for server-based technologies, the long-established thin client/terminal services approach is the most widely employed; meanwhile VDI based on a shared server architecture, perhaps the desktop virtualisation term that attracts the most attention, is in active use, ahead of application virtualisation.

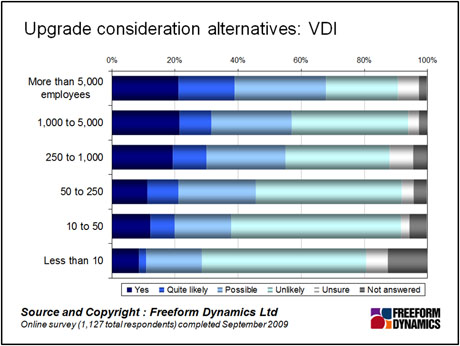

A second survey, carried out more recently, looked at VDI specifically: the results are shown below. With many organisations actively looking at refreshing desktop estates, VDI is attracting serious attention – especially among larger enterprises. In organisations with more than 5,000 employees, the research shows that more than half are considering VDI. Even in the smallest organisations, more than 20 per cent are considering VDI rather than a like-for-like desktop refresh.

Despite this level of interest, we hear from IT professionals who have started desktop virtualisation projects that making the business case can be problematic. To resolve this, note that the basics are the same as for any such project: first work out which users you have, what they are using, and their usage patterns. From this it should be possible to identify which user groups are suitable candidates for desktop virtualisation, and which approach suits each best. It is essential to gather this core data if you want to avoid unexpected costs later, when overlooked users or systems show up.
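As a rough illustration of that first step, the inventory can be boiled down to a per-group summary before any technology is chosen. The sketch below is ours rather than anything taken from the research: the `UserRecord` fields, the group names and the "needs to work offline" rule are all assumptions made for the example, and a real assessment would weigh far more factors.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class UserRecord:
    """One row of a (hypothetical) desktop inventory."""
    group: str            # e.g. "call centre", "field sales"
    apps: frozenset       # applications the user relies on
    works_offline: bool   # must be able to work without a network connection


def summarise(records):
    """Tally users, offline workers and applications for each user group."""
    summary = defaultdict(lambda: {"users": 0, "offline": 0, "apps": set()})
    for record in records:
        entry = summary[record.group]
        entry["users"] += 1
        entry["offline"] += int(record.works_offline)
        entry["apps"] |= record.apps
    return dict(summary)


def server_based_candidates(summary, max_offline_share=0.0):
    """Illustrative rule only: shortlist groups whose users rarely work offline.

    The threshold is an assumption for this sketch, not a recommendation.
    """
    return sorted(
        group
        for group, entry in summary.items()
        if entry["offline"] / entry["users"] <= max_offline_share
    )


# Made-up inventory, purely to show the shape of the data.
inventory = [
    UserRecord("call centre", frozenset({"crm"}), False),
    UserRecord("call centre", frozenset({"crm", "email"}), False),
    UserRecord("field sales", frozenset({"crm", "email"}), True),
    UserRecord("field sales", frozenset({"email"}), False),
]
shortlist = server_based_candidates(summarise(inventory))
```

Groups that fall off the shortlist are not ruled out of desktop virtualisation altogether; they may simply be better served by one of the client-side options.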

Step two is then to establish expected project costs, preferably with someone who has real-world experience of a similar project, so that they can be compared with the costs of a standard desktop refresh. It is worthwhile trying to include the costs of “ongoing management, support and system recovery” for both options, as these are often overlooked. With the consumerisation of devices in enterprise use, and more users bringing their own equipment into the business – iPads are currently a good example – support costs for locked down environments using desktop virtualisation are likely to be lower. This, though, comes at the expense of flexibility. Getting hold of data to illustrate this trade-off may take some effort, but it could be compelling when working out the business case for desktop virtualisation.
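The arithmetic behind that comparison can be sketched in a few lines. Everything here is an assumption made for illustration: the `Option` structure, the split into up-front and annual per-user costs, and above all the figures, which are placeholders and not survey data or vendor pricing.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Option:
    """A desktop strategy described by two per-user cost figures."""
    name: str
    upfront_per_user: float   # hardware, licences, migration, professional services
    annual_per_user: float    # ongoing management, support and system recovery

    def total(self, users: int, years: int) -> float:
        """Total cost for the given number of users over the given period."""
        return users * (self.upfront_per_user + self.annual_per_user * years)


def break_even_years(candidate: Option, baseline: Option) -> Optional[float]:
    """Years until the candidate's total cost matches the baseline's.

    Returns 0.0 if the candidate is no more expensive up front, and None if
    it costs more up front and never recovers that through lower running costs.
    """
    extra_upfront = candidate.upfront_per_user - baseline.upfront_per_user
    annual_saving = baseline.annual_per_user - candidate.annual_per_user
    if extra_upfront <= 0:
        return 0.0
    if annual_saving <= 0:
        return None
    return extra_upfront / annual_saving


# Placeholder figures only: substitute numbers from your own assessment.
refresh = Option("like-for-like refresh", upfront_per_user=600.0, annual_per_user=300.0)
vdi = Option("VDI", upfront_per_user=1000.0, annual_per_user=200.0)

refresh_cost = refresh.total(users=100, years=3)
vdi_cost = vdi.total(users=100, years=3)
payback = break_even_years(vdi, refresh)
```

With these made-up inputs the option with the higher up-front cost is still the more expensive one after three years and only draws level in year four, which shows how sensitive the outcome is to the period chosen and to how completely the ongoing costs have been captured.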

Early adopters tell us that it is also valuable to look at the server, storage and networking systems required to deliver a desktop virtualisation infrastructure that meets the service levels that users expect. Specifically, server-based desktop virtualisation approaches such as VDI require the whole infrastructure to work together, which can be a change for IT professionals familiar with standalone desktop environments that can at least function should there be any problems at the back end.

The last step: make sure that migration planning is included in the project specifications, as this can have a dramatic impact on project timescales, professional services costs and user satisfaction – whichever option is undertaken.

With this information available, you can begin to build the business case, but be aware that, according to some reports, desktop virtualisation projects can still be difficult to justify using standard three-year investment lifecycles. Early adopters have pointed out that several of the benefits of desktop virtualisation are difficult to quantify in monetary terms, even when the business benefits are clear. The decision-making process is likely to involve a lot of discussion all round, taking more factors into account than simple cost savings.