At the beginning of the ‘Cloud’ movement, vendors, evangelists, visionaries and forecasters were often heard proclaiming that eventually all IT services would end up running in the public cloud, not in the data centres owned and operated by enterprises themselves. Our research at the time, along with that of several others, showed that the reality was somewhat different: the majority of organisations said they expected to continue operating IT services from their own data centres and from those of dedicated partners and hosters, even as they put certain workloads into the public cloud.

More recent research by Freeform Dynamics illustrates that this expectation – running IT services both from in-house operated data centres, and from public cloud sites – is now very much an accepted mode of operation. Indeed, it is what we conveniently term “hybrid cloud” (Figure 1).

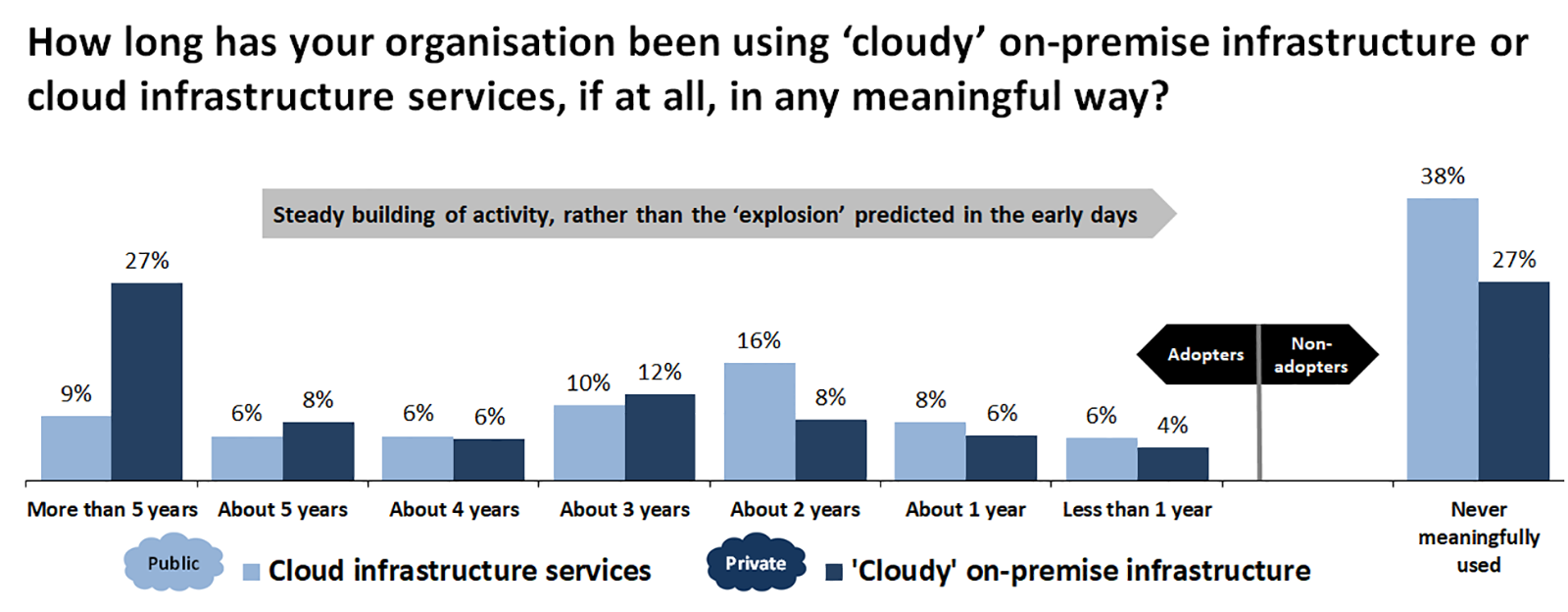

The chart shows that over the last five years almost three-quarters of organisations have already deployed, at least to some degree, internal systems that operate with characteristics similar to those found in public cloud services, i.e. they have deployed private clouds. Over the same period, just under two-thirds of those taking part in the survey stated that they already use public cloud systems. It is interesting to note that both private and public cloud usage have grown steadily rather than explosively, but this is not surprising given the pressures under which IT works and the time that adoption of any “new” offering takes, especially if the systems are expected to support business applications rather than those requiring lower levels of quality or resilience (Figure 2).

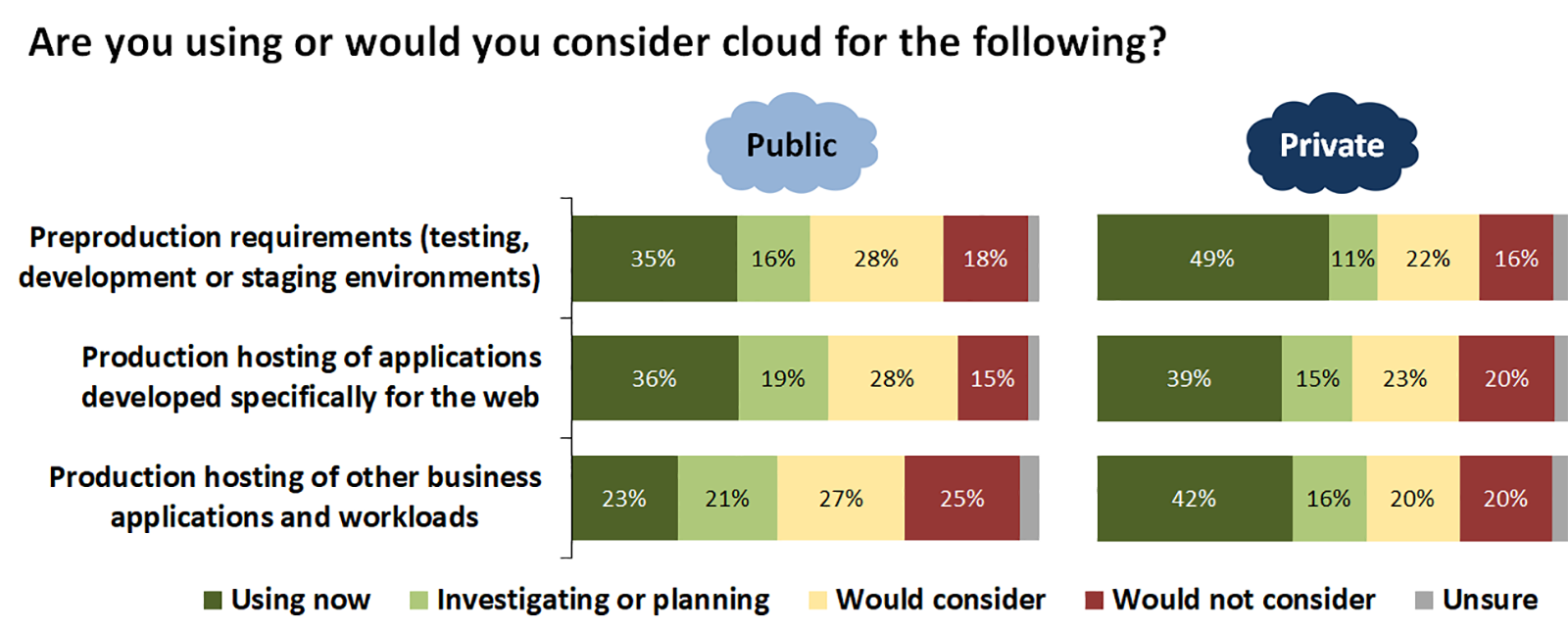

The second chart shows that for a majority of organisations, private cloud is already in use or will be supporting production business workloads in the near future. The adoption of public cloud to run such workloads clearly lags behind, but its eventual usage is only out of the question for around a quarter of respondents. When combined with the results for test/dev and the production hosting of applications and services developed specifically for the web, the picture of a hybrid cloud future for IT is unmistakable.

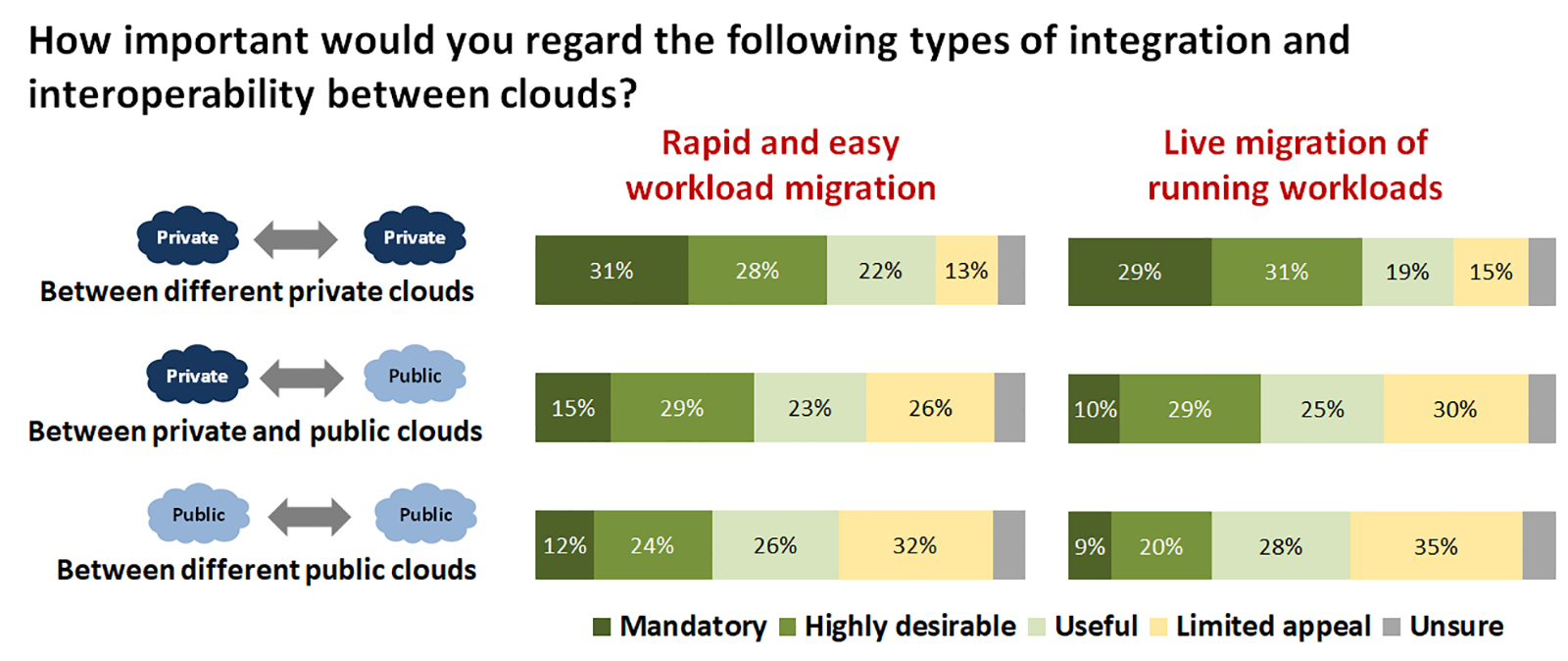

But if ‘hybrid IT’ is to become more than just a case of independently operating some services on internally owned and operated data centre equipment and others on public cloud infrastructure, the survey points to some key characteristics that must form part of the management picture (Figure 3).

The results in this figure highlight several key requirements that must be met around the movement of workloads if ‘hybrid cloud’ is to become more than a marketing buzzword. Given that private clouds are today used more extensively to support business applications than public clouds, there should be little surprise that smoothing the movement of workloads between different private clouds is ranked as important, or at least useful, by around four out of five respondents.

But the chart also indicates a recognition of the need to move workloads smoothly between private clouds running in the organisation’s own data centres and those of public cloud providers. And almost as many answered similarly about the need to be able to migrate workloads between different public clouds. The importance of these integration and interoperation capabilities is easy to understand: they are essential if we want to achieve the promise of cloud, in particular the ability to rapidly and easily provision and deprovision services, and the ability to dynamically support changing workloads coupled with hyper scalability to ease peak resource challenges and enhance service quality.

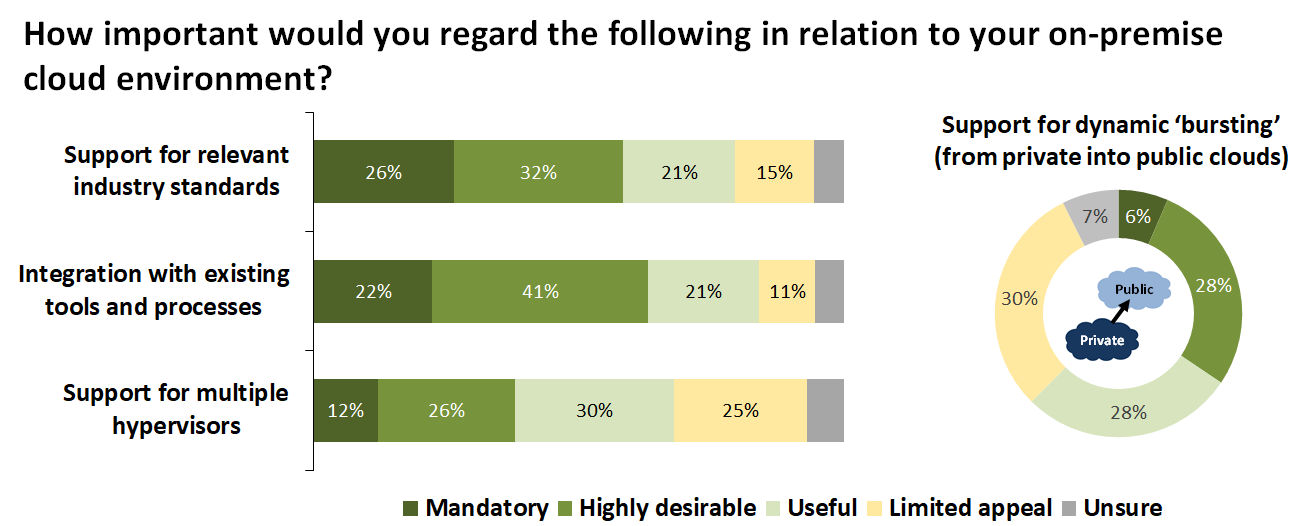

How quickly such capabilities can be delivered depends on a number of factors (Figure 4).

The need for the industry to adopt common standards is clear and, to its credit, things are beginning to move in this direction, although there is still much work to be done. The same can be said for integrating cloud services with the existing management tools with which organisations keep things running, although, once again, things do need to improve, especially in terms of visibility and monitoring.

The Bottom Line

The days of vendors building gated citadels to keep out the competition and keep hold of customers should be coming to an end, as many – though alas not all – are under pressure to supply better interoperability. In truth, while interoperability does make it easier for organisations to move away, such capabilities are also attractive and can act as an incentive to use a service.

After all, no one likes the idea of vendor lock-in, and anything that removes or at least minimises such fear can help smooth the entire sales cycle. In addition, if a supplier makes interoperability simple by adopting standards, being open and making workload migration straightforward, they then have an excellent incentive to keep service quality up and prices competitive.