By Andrew Buss

The world of business is becoming faster-moving, more competitive and ever more dependent on IT. Transactions and interactions between suppliers, the channel and even customers that have traditionally been handled manually have become largely electronic. Many of the business's day-to-day operations and communications rely on IT services to function, and failures have the potential to bring activities to a grinding halt.

With so many areas of the modern business now highly dependent on IT, it will come as no great surprise that the old saying ‘if there is one constant in IT, it is that change is constant’ is truer today than ever before. Yet most IT departments struggle to deliver when the business asks for something to be changed or added. Today this is mostly a source of frustration for both IT and the business. But the problem is likely to become much more acute as the business comes under more pressure in the marketplace and a raft of external services such as SaaS and public cloud put the performance of IT in the spotlight.

Fragmentation is the rule rather than the exception

To remain healthy and viable, and to enable the business to thrive, the datacentre of the future will need to be dynamic and able to reconfigure to meet changing needs much more rapidly and seamlessly than today. This increased flexibility will depend on all parts of the datacentre, from the applications and servers through to storage and networking, working as an integrated whole and pulling their weight when it comes to change.

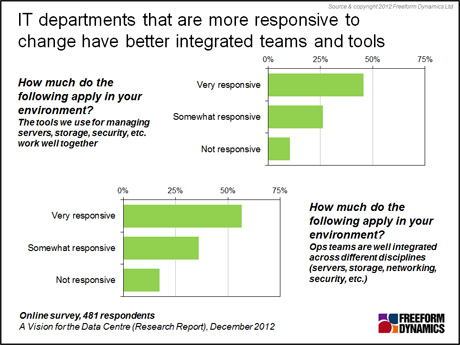

Yet in many of the companies we speak to, these critical infrastructure elements are procured, managed and run separately, resulting in a fragmented infrastructure and significant, often unrecognised, operational burdens. Among the IT organisations that are most responsive to change, the tendency is towards tighter integration (Figure 1).

Figure 1

Turning this situation around so that the datacentre infrastructure is much more converged and better integrated could bring significant benefits, but it is a big job and easier said than done. However, by recognising the problem and tackling it in stages, many of those benefits can be realised, and fairly early on too.

Virtualisation is changing how IT needs to be run

For most companies that we survey, the work of IT is defined in terms of implementing and running individual systems and applications. In other words, both IT and the business typically think in terms of specific servers or software applications, such as Exchange Server, rather than the generic service being delivered, such as email.

This worked reasonably well, if not perfectly, when systems were largely static and each could be individually architected and optimised for its task. But time and technology have moved on. Virtualisation has taken hold, first in servers and now increasingly in storage and networking too.

What was once stable and predictable has become dynamic and far more complex and variable. Running IT in the usual way may work, for a while at least. But as more use is made of virtualisation, problems usually begin to mount up rapidly, and the situation can end up just as challenging as before, only with a different set of problems to overcome.

So while virtualisation is an important enabler, it is not enough on its own to create the integrated environment needed to tackle fragmentation in a sustainable manner.

Think services, not systems

One of the best places to start when it comes to integrating and improving the IT infrastructure is to shift from thinking about individual systems to thinking about the services that the business requires. Our research shows that IT organisations that take this approach generally become better aligned with the needs of the business, are more responsive to changing requirements and tend to have higher levels of end-user and business management satisfaction with IT.

Developing a service-led mindset can require some fairly fundamental changes and may appear daunting at first. But often it is really about having the confidence to get started on the journey. In other words, start small and build the capability over time. Many companies don’t even have a basic catalogue of services, and as a result lack fundamental visibility into what is involved in delivering a service, as well as the ability to troubleshoot it when something goes wrong. Simply documenting important services, even in a basic tool such as Excel or Visio, can start to deliver tangible benefits in understanding and optimising day-to-day operations.
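The basic catalogue described above does not need to be sophisticated to be useful. As a minimal sketch, assume each business service is simply recorded against the infrastructure components it depends on; the service and component names below, and the helper functions, are hypothetical and stand in for whatever a spreadsheet or diagram would hold. Even this much lets IT answer a common troubleshooting question: which services are affected when a given component fails?

```python
from collections import defaultdict

# Hypothetical catalogue: each business service maps to the infrastructure
# components it depends on. All names are illustrative only.
SERVICE_CATALOGUE: dict[str, set[str]] = {
    "email": {"mail-server-01", "san-array-a", "core-switch-1"},
    "order-processing": {"app-server-03", "db-server-02", "san-array-a"},
    "intranet": {"web-server-05", "core-switch-1"},
}


def services_affected_by(component: str, catalogue: dict[str, set[str]]) -> list[str]:
    """Return, sorted by name, the services that depend on a component."""
    return sorted(name for name, deps in catalogue.items() if component in deps)


def component_index(catalogue: dict[str, set[str]]) -> dict[str, set[str]]:
    """Invert the catalogue into component -> set of services using it."""
    index: dict[str, set[str]] = defaultdict(set)
    for service, deps in catalogue.items():
        for dep in deps:
            index[dep].add(service)
    return dict(index)


if __name__ == "__main__":
    # Troubleshooting view: a fault on one storage array touches two services.
    print(services_affected_by("san-array-a", SERVICE_CATALOGUE))
    # -> ['email', 'order-processing']
```

The inverted index also makes shared components visible at a glance, which is the kind of cross-silo dependency that separate server, storage and networking teams can easily miss.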

As service delivery becomes more familiar and natural, it makes sense to manage it more formally. Dedicated tools can help with this, and the choice has grown in the last few years. Options range from integrated tools that come with servers and systems through to third-party offerings that may require more investment and integration but ultimately offer more capability.

Developing a service-centric approach can pay many dividends, but one of the big benefits is that when the business requests something new, the discussion should be about the expected outcome, not the delivery mechanism. In other words, the focus should be on how many users are supported, what response times are acceptable and how much downtime can be tolerated, rather than which processor or how much memory is in a particular box.
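To make the contrast concrete, here is a minimal sketch of a request captured purely in outcome terms. The `ServiceRequirement` record, its field names and the example figures are hypothetical; the only calculation is standard arithmetic that turns an availability percentage into a yearly downtime budget (assuming a 365-day year), which gives both sides a shared number to discuss instead of a hardware specification.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 365 * 24  # 8760 hours; leap years ignored for simplicity


@dataclass(frozen=True)
class ServiceRequirement:
    """A hypothetical request expressed as business outcomes, not hardware."""

    name: str
    users_supported: int
    max_response_time_ms: int
    availability_pct: float  # e.g. 99.9 means the service is up 99.9% of the time

    def allowed_downtime_hours_per_year(self) -> float:
        """Convert the availability target into a yearly downtime budget."""
        return HOURS_PER_YEAR * (100.0 - self.availability_pct) / 100.0


email = ServiceRequirement(
    name="email",
    users_supported=2000,
    max_response_time_ms=500,
    availability_pct=99.9,
)
print(f"{email.name}: {email.allowed_downtime_hours_per_year():.2f} hours/year")
# prints "email: 8.76 hours/year"
```

Nothing in the record says which servers, storage or network gear will deliver the service, leaving IT free to meet the targets from a shared pool.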

This can then help to break the links that tend to develop naturally between business budget holders, dedicated infrastructure, and the ongoing operation of the various systems by the IT department. These links are largely responsible for the emergence of silos in the datacentre and make it harder to build the case for a shared, dynamic infrastructure.

Getting different teams to talk to each other

One of the major barriers to a more dynamic and responsive IT infrastructure, and ultimately to visions like private cloud, is that applications, servers, storage and networking are usually bought, implemented and operated in silos that are independent of each other. This may work well for each silo from a technology point of view, but unless the silos work together towards the common goal of supporting the business, many of the potential benefits are likely to be lost.

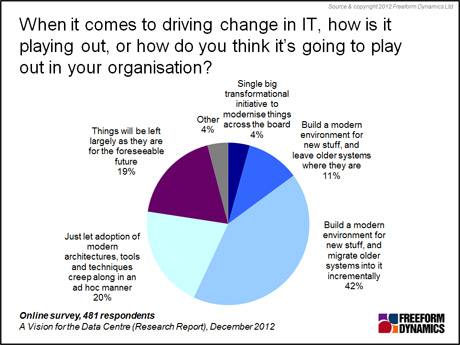

What this means is that sooner or later, the individual elements that make up the IT infrastructure need to be unified so that they fit and work well together. While some companies can afford to do this all at once as part of a transformation initiative, the vast majority cannot, and instead need to improve incrementally on what they already have in place, across the teams, the tools and the infrastructure (Figure 2).

Figure 2

One of the highest priorities is to get what is already in place working well together. While it might be tempting to think that buying new technology will help here, a good place to start is often to break down some of the operational barriers by getting the teams responsible for servers, storage and networking to work more closely together.

Content Contributors: Andrew Buss