High-Performance Computing (HPC) has traditionally been seen as the domain of the über-specialist.

It’s as close as the IT industry will ever get to “2 Fast 2 Furious”: gangs of highly technical experts pushing their custom-built computers to the limit with the aim of winning the ultimate prize, a place in the world supercomputing rankings. No doubt there will be some blue neon thrown in too.

Meanwhile, of course, mainstream application requirements are becoming equally demanding, for example in high-end business intelligence. As a consequence, higher-performance computing platforms are becoming more important in ‘routine’ business operations. To determine whether the gap was closing between traditional HPC and more run-of-the-mill high-end computing, we ran a Reg survey and gathered information from 254 respondents, the majority of them IT professionals and systems architects from a mix of industries and company sizes.

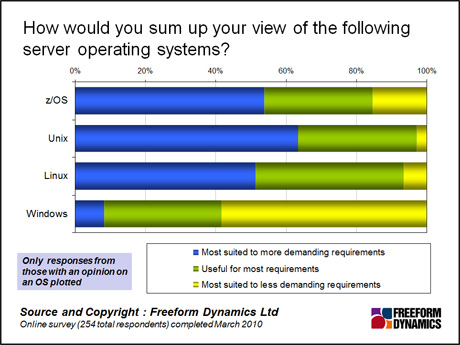

The first thing we wanted to establish was what technology is out there. As the figure shows, the results highlight the high degree of confidence respondents place in traditional enterprise server operating systems as high-performance workhorses. UNIX, Linux and z/OS are all seen positively for the majority of requirements, high-performance or otherwise.

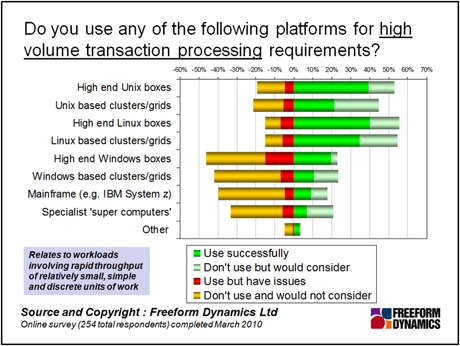

Microsoft’s Windows is a comparatively new entrant but is already seen as potentially useful by almost half of respondents, giving organisations another option when assessing which platform to deploy for such workloads. We say ‘potentially’ because, as the figure illustrates, it is not without its issues. Even so, it already has a larger footprint than either mainframe or ‘specialist’ supercomputer equipment.

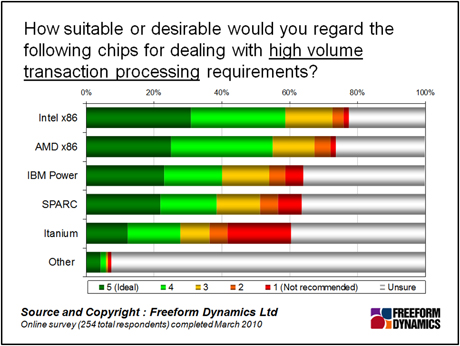

This nod towards more commodity operating systems is also evident when we look at the chipsets. Commercially available chipsets now dominate the HPC market, displacing specialist suppliers, as Intel and AMD x86 offerings have taken the lead in perceived suitability for such workloads. IBM’s Power chipset is also recognised as a suitable platform by just over 40 percent of respondents, again reflecting its long history in this space; the Sun/Fujitsu (or should that be Oracle/Fujitsu?) SPARC is also well regarded.

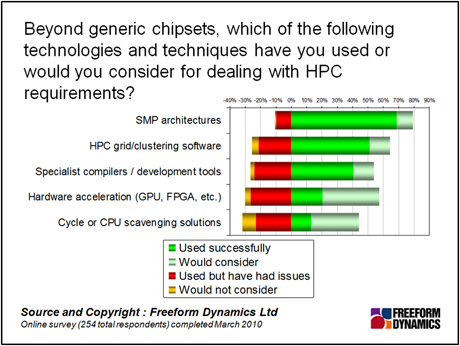

While the trend may be towards commodity chipsets and operating systems, HPC still brings a number of facilities to the party, not least system architectures such as symmetric multi-processing, specialist compilers and hardware acceleration features. While respondents were generally positive about such features, note that every option was also reported to pose challenges. At the leading edge of HPC, the need for specialist skill sets is unlikely to diminish any time soon, as reflected by the respondents who said that HPC requirements will continue to demand systems tuned explicitly to compute-intensive workloads.

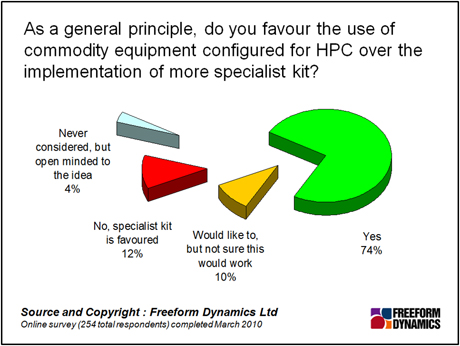

Despite this confirmed ongoing need for specialist additions, there is a clear drive towards commodity-based high-performance computing for more mainstream applications. ‘Commodity’ equipment is seen as the best approach to building HPC systems, with three out of four respondents favouring it over ‘specialist’ kit. Of the remaining respondents, some 14 percent are either open to the idea of using commodity equipment or would like to do so but would need guidance on whether it would work for them. Only one organisation in eight actively favours using specialist equipment in its HPC operations.

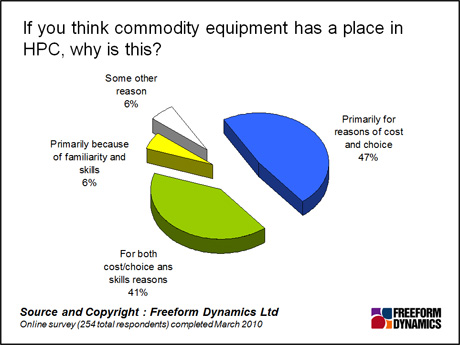

Familiarity, cost and choice emerge as the reasons. The jury is out as to which matters most, but responses generally come down to ‘the devil you know’. Commodity chipsets have clearly crossed a threshold where they are powerful enough, and configurable enough, to make any additional benefits of more specialist platforms less relevant. There is also evidence (not shown) of interest in combining these ‘commodity’-based systems and generic operating systems with virtualisation techniques, allowing equipment to be switched back and forth between HPC and non-HPC activity, with obvious cost benefits.

With business demand for higher-performance computing platforms likely to grow, increasing attention will probably be focussed on commodity-based platforms as time goes on, particularly on ease of deployment and operation and the associated total cost of ownership (TCO). This suggests a virtuous circle: as more use is made of such platforms, we expect high-performance computing to become increasingly accessible and more straightforward to deploy, which can only benefit the business community in general. For more information on this research, the full report is available for download.