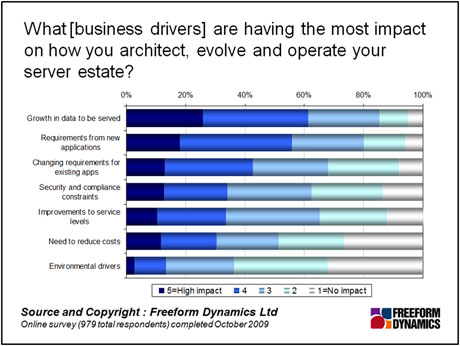

Nothing stands still forever, particularly not in IT, and with good reason. When we researched the drivers having the most impact on how x86 server environments are architected, evolved and operated, for example, we found that data growth was the number one driver, followed closely by new application requirements and then by changing requirements from existing applications (Figure 1).

Of course, there is more to the average data centre than x86 servers, but the same drivers will have an impact across all hardware platforms. Given that change is inevitable, what approaches work best in minimising its impact?

Let’s first consider the causes of change in more detail. “Data growth” is the continuing consequence of the information age, and a good indicator of how far technology still has to go. Moore’s Law is only just keeping up with our insatiable appetite for data processing, which is unlikely to rest until every single molecule in the universe has been mapped out and every possible transaction is logged in minute detail. Every time we think we have found “the answer”, be it the latest CRM system, videoconferencing facility or whatever, new ways of using technology kick in, driving new sets of requirements that our existing application base can only sometimes support immediately.

Larger companies may have a thousand or so applications they see as core, with several thousand more running across the organisation, not all of which IT will know about. Smaller businesses will also be familiar with the issues caused by having a broad set of applications, such as not knowing who is using what. The consequence is that it sometimes appears easier to start from scratch with a new application than to work out whether anything already in place could be adopted, repurposed or built upon.

Meanwhile, although some existing business applications will require little or no major change (upgrades aside) throughout their lifespan, others will. This is particularly true of those closely linked to customer-facing activities: insurance claims-management systems, website back-end systems and tax applications are good examples of systems that demand change on very fast timescales. Equally, we are seeing increasing demand for software that delivers business services and information in a more palatable form, from Flash and Silverlight front ends to web scripting and, of course, “there’s an app for that” on ‘smarter’ mobile devices. The end result is that software applications, small and large, will continue to evolve and proliferate.

From an application management standpoint, attempting to deal with the challenge is akin to “managing” a rainforest. It would be impossible to keep control over every single tree, plant, animal and insect, and that is not necessarily the best way to proceed given that the rainforest probably does a reasonably good job of looking after itself, at the root and branch level. Rather, it is a case of balancing efforts, managing the subset of things that benefit from being managed, and leaving the rest to manage themselves.

In practical terms, this does go back to principles such as portfolio management but, given the rate of change, the question becomes how to decide what’s in and what’s out.

Applications that appear very important now may be obsolete, or simply irrelevant, in six months’ time. In our conversations with IT decision makers, a consensus is emerging that it is better not to try to manage everything. What matters more is having a set of gating factors that determine when a given application is business-critical enough to be proactively supported, not least by weighing the costs of both the technology and its operational management and support.

Just as nothing stands still forever, so all good things come to an end, and it is important for senior IT decision makers to have a clear picture of when applications no longer merit formal support. Not every organisation will be courageous enough to “shoot the dogs” when they no longer perform as they used to, not least because of the politics around decommissioning. However, it should certainly be possible to remove under-used applications from the rosters of front-line operations. Indeed, even a modicum of visibility into the costs of the technology involved is enough to give business departments the option of paying for ongoing licensing and support themselves, if they insist on keeping an application running beyond its sell-by date.

There may come a point at which you feel the need to undertake a more strategic application rationalisation exercise, with all the challenges (for example, around data management) that this may involve. You are more likely to arrive at this point because you have to than because you want to. However, such crisis moments can also be treated as opportunities to get the house in order, by taking a step back from the application portfolio as a whole and weighing its value to the business against the true costs of maintaining and supporting individual systems.

In the meantime, however, change management in the context of applications is a case of striking a balance between full-on, formal management of core systems and taking a rational view of the broader, unsupported application portfolio. If you have any specific tips about how to tell one from the other, do let us know.

Content Contributors: Jon Collins
